Using Cluster Autoscaler

Introduction

The Cluster Autoscaler automatically adjusts the number of nodes in your Kubernetes clusters based on current workloads. When pods are pending due to insufficient capacity, new nodes are provisioned. When nodes are underutilized, they are removed to reduce resource waste and cost.

To enable automatic resource optimization, Syself Autopilot integrates with the Cluster Autoscaler on Cluster API.

For best results, use the Cluster Autoscaler in conjunction with Horizontal Pod Autoscalers (HPAs), which adjust pod replicas based on resource requests, resulting in pending pods when capacity is insufficient, triggering the Autoscaler.

Supported Configuration

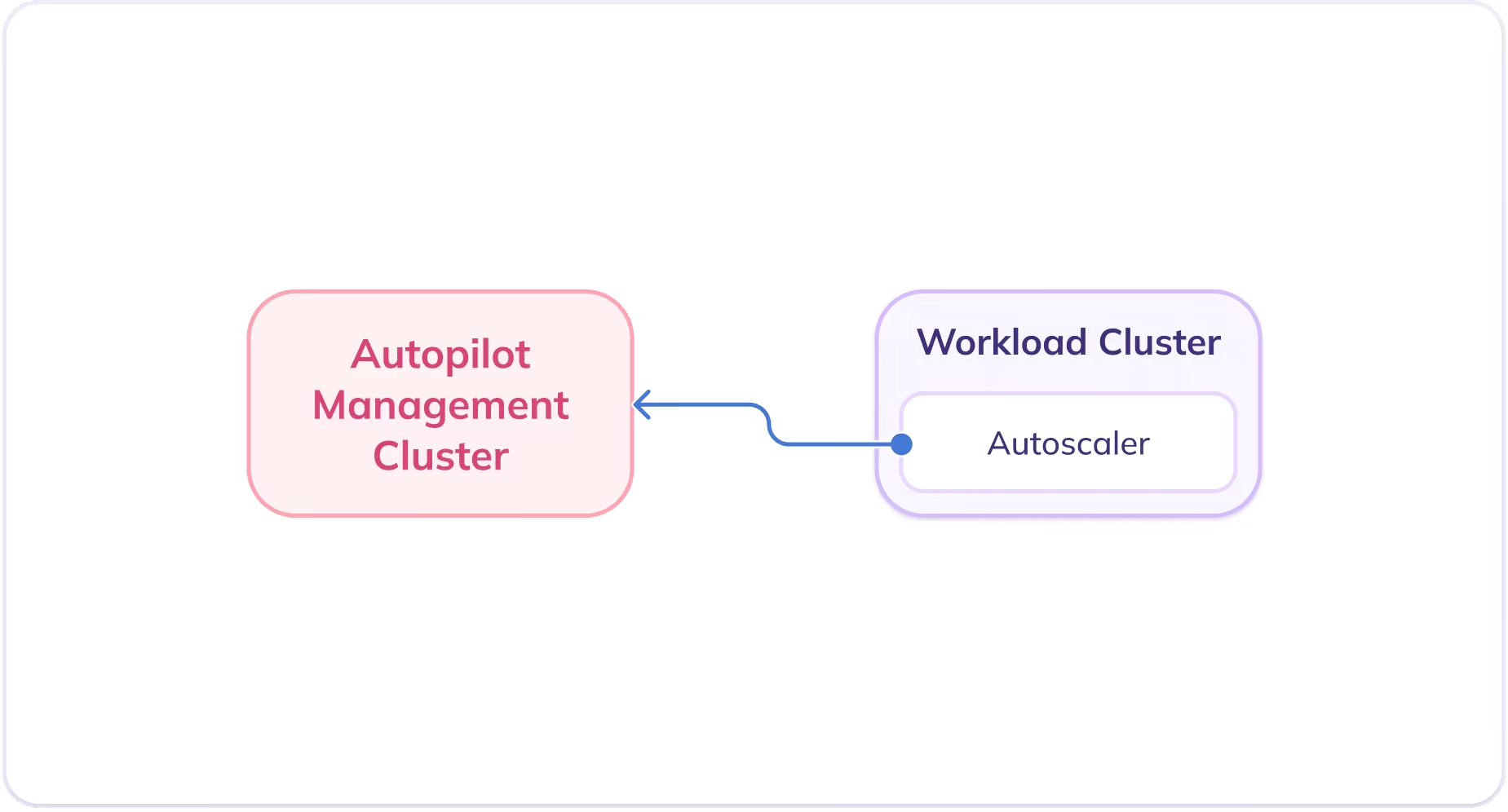

Syself Autopilot supports deploying the Cluster Autoscaler inside the workload cluster, configured to communicate with the management cluster using service account credentials.

This mode of operation is referred to as "incluster-kubeconfig". For additional details, refer to the Cluster Autoscaler Helm Chart documentation.

Installation Guide

Step 1: Secret Creation in the Workload Cluster

To allow the Cluster Autoscaler running in a workload cluster to authenticate with the Syself Autopilot management cluster, you must create a secret containing a kubeconfig file. This secret is templated using the existing cluster-autoscaler-sa-secret from the management cluster:

Step 2: Deploying the Cluster Autoscaler

Use the official Helm chart to install the Cluster Autoscaler into the workload cluster.

Add Helm Repository

Install Cluster Autoscaler

The values below reflect our recommended setup for typical use cases, but you are free to modify them as needed to fit your cluster's specific requirements.

Update the following configurations:

- Set the value of

autoDiscovery.labels[0].namespaceto match the namespace of your organization. Note that it should start with the prefixorg-followed by your organization's name. - Modify

autoDiscovery.clusterNameto reflect the name of your cluster.

To configure the Autoscaler behavior, specific flags can be adjusted. When deploying the Cluster Autoscaler with Helm, these flags can be passed using the extraArgs parameter.

For a full list of configuration options, please refer to the Cluster Autoscaler Helm chart documentation.

Step 3: Verification

To ensure that the deployment was successful, check if the pod is running using the following command:

Concept: Autoscaling Groups

An autoscaling group is a logical grouping of nodes that can be scaled independently. Each group:

- Maps to a single MachineDeployment

- Has a minimum and maximum number of nodes

- Is scaled by the Cluster Autoscaler by adjusting the replicas field of the associated MachineDeployment

On Syself Autopilot, you have two ways to define which MachineDeployments should be autoscaled: explicitly listing them under the autoscalingGroups Helm chart value:

Or using annotations directly in the cluster manifest:

In both examples above, Cluster Autoscaler will:

- Never reduce the number of nodes in this group below 1

- Never increase it above 10

- Automatically adjust the replicas field within that range based on workload demands

By default, Cluster Autoscaler does not enforce the node group size. If your cluster is below the minimum number of nodes configured for Cluster Autoscaler, it will be scaled up only when there are unschedulable pods. Similarly, if your cluster is above the maximum number of nodes configured, it will be scaled down only if it has unneeded nodes.

This behaviour can be changed for the minimum number using the enforce-node-group-min-size flag, which is already set to true on our recommended default values. When set, CA will scale node groups with fewer nodes to match the minimum.

Example Configurations

Bare Metal Base + Cloud Bursting with VMs

Always-on base capacity is handled by bare metal machines (high performance and lower price), and burst traffic is handled by cloud VMs.

ARM Node Pool for Cost-Efficient Workloads

Use x86 nodes for general-purpose workloads, and scale ARM nodes.

Isolated Frontend vs Backend Pools

Use fixed backend nodes, scale frontend nodes as traffic increases.

Getting Logs and Status

The Cluster Autoscaler exposes insights on scaling decisions through the cluster-autoscaler-status ConfigMap. You can check it with this command:

Under data.status.clusterWide there will be cluster-wide information about the health, and scaling decisions. If you want to find information about a specific node group, you can search for it on the data.status.nodeGroups array.

If you want to see the logs of Cluster Autoscaler, you can do so with the following command:

Cluster Autoscaler logs can be hard to read since each individual action taken by the controller is logged. Checking the logs is more useful if you suspect something is wrong with CA, and want to search for errors. You can filter only log lines containing the word error with this command:

Frequently Asked Questions

When does Cluster Autoscaler change the size of a cluster?

A scale up is triggered when pending pods cannot be scheduled due to insufficient resources.

A scale down is triggered when a node is underutilized and pods can be rescheduled elsewhere or are not running at all (e.g., due to PDBs or affinity constraints).

How can I monitor Cluster Autoscaler?

Metrics are exposed via the Prometheus-compatible endpoint /metrics on the CA pod.

Key metrics:

- cluster_autoscaler_unschedulable_pods_count

- cluster_autoscaler_nodes_count

- cluster_autoscaler_nodegroup_min_size

How does Horizontal Pod Autoscaler work with Cluster Autoscaler?

The Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas in a Deployment or ReplicaSet based on metrics like CPU utilization. When load increases, HPA scales out by creating more pods. If there aren't enough available resources in the cluster to schedule these pods, the Cluster Autoscaler (CA) will detect the unschedulable state and attempt to add new nodes to accommodate them. Conversely, when load decreases, HPA reduces the number of replicas. This can leave some nodes underutilized or completely idle, in which case CA may remove those nodes to reduce resource waste and cost.

How to prevent Cluster Autoscaler from scaling down a specific node?

A node will be excluded from scale-down if it has this annotation:

What are the best practices for running Cluster Autoscaler?

Running Cluster Autoscaler effectively requires a few key practices to ensure stability, efficiency, and responsiveness to workload demands. First, it’s important to use multiple node groups (or machine deployments) to give CA flexibility in scaling decisions. By having different machine types and failure domains, CA can select the most suitable node group when scaling up or down. This is especially useful for workloads with diverse resource requirements.

Another crucial aspect is avoiding DaemonSets that run on every node unless they’re absolutely necessary. CA considers all pods when evaluating node utilization, and DaemonSets can make nodes appear busier than they are, preventing scale-down. Similarly, configure podDisruptionBudgets (PDBs) carefully. Overly strict PDBs can block CA from removing nodes even when they're otherwise safe to terminate.

It’s also important to ensure that workloads you expect to trigger scaling are actually unschedulable when the cluster is full. For example, if a pod has a toleration for taints but no matching node exists, CA may not react as expected. Always test autoscaling behavior under simulated load to catch such edge cases.

Finally, monitor CA logs and metrics regularly. While CA is designed to be hands-off, visibility into its decisions helps in tuning performance and catching configuration issues early.